You’ve talked to it. You’ve yelled at it. You’ve probably thanked it for reminding you about a dentist appointment you still skipped.

That voice in your phone, that chat bubble on the site, that polite-but-creepy customer service rep who never sleeps?

That’s conversational AI.

And it’s not coming—it’s already here, living rent-free in your day-to-day interactions.


Okay, But What Is Conversational AI?

This is the tech that turns machines into talkers, built to mimic how humans chat, stutter, joke, and miscommunicate.

In real terms?

It’s the chatbot answering your return request.

It’s the voice assistant queueing your playlist.

It’s that AI therapist app you downloaded but never opened again.

Unlike basic bots that follow if/then scripts like a flowchart on life support, conversational AI uses natural language processing (NLP), machine learning (ML), and sometimes voice recognition to engage with you like a person who’s taken too many improv classes.

It learns. It adapts. It guesses what you meant when you typed “order stuck but like already paid.”

And sometimes, it even gets it right.

So, Is It Just Chatbots?

Nope. Chatbots are one type of conversational AI, usually the shallowest end of the pool.

But the field includes:

Voicebots (Google Assistant)

Virtual agents (automated customer service)

AI Companions (Replika, Character.ai, your lonely midnight impulse download)

LLM-Powered Interfaces (ChatGPT, Claude, Gemini)

The key difference?

Think less auto-reply, more improv actor with a data addiction. It mimics tone, fakes empathy, and occasionally nails sarcasm.

(Other times it hallucinates and tells you to microwave your phone. It’s a work in progress.)

What Powers It

Behind the scenes, this tech stack is a wild cocktail of:

Natural Language Understanding (NLU): Parsing what you said and mapping it to an intent

Natural Language Generation (NLG): Figuring out how to respond like a sentient thing

Contextual Memory: Knowing you already asked for tracking info and not repeating itself

Speech-to-Text & Text-to-Speech: For voice-based systems

Machine Learning Models: Training on human convo patterns to sound “normal”

TL;DR: It listens, guesses, responds, and learns—like a toddler with a server rack.
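To make that stack less abstract, here is a deliberately tiny Python sketch of the listen, guess, respond loop. Everything in it is hypothetical: the intent names, the keyword sets, the canned replies, and the placeholder tracking number. A keyword-overlap score stands in for real NLU (production systems use trained models), fill-in templates stand in for NLG, and a set of already-answered intents plays the role of contextual memory. It skips speech entirely and does no learning at all.

```python
import re
from dataclasses import dataclass, field

# Hypothetical intents and trigger words. A real NLU layer would use a trained model.
INTENT_KEYWORDS = {
    "track_order": {"track", "tracking", "order", "stuck", "where", "shipped"},
    "refund": {"refund", "return", "money", "paid"},
    "greeting": {"hi", "hello", "hey"},
}

# Hypothetical canned replies standing in for NLG. "ABC123" is a placeholder.
REPLIES = {
    "track_order": "Your order is on its way. Tracking number: ABC123.",
    "refund": "I can start a refund for that order.",
    "greeting": "Hi! How can I help?",
}


@dataclass
class Context:
    """Contextual memory: which intents this user has already been answered on."""
    answered: set = field(default_factory=set)


def understand(text: str) -> str:
    """Toy NLU: pick the intent whose keywords overlap the message the most."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    scores = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)  # first intent with the highest score
    return best if scores[best] > 0 else "unknown"


def respond(text: str, ctx: Context) -> str:
    """Guess the intent, check memory so we don't repeat ourselves, then reply."""
    intent = understand(text)
    if intent == "unknown":
        return "Sorry, I didn't catch that. Could you rephrase?"
    if intent in ctx.answered and intent != "greeting":
        return "I already shared that above. Anything else?"
    ctx.answered.add(intent)
    return REPLIES[intent]


if __name__ == "__main__":
    ctx = Context()
    for msg in ["hey", "order stuck but like already paid", "where is my order??", "asdf"]:
        print(f"> {msg}\n{respond(msg, ctx)}")
```

Feed it "order stuck but like already paid" and the overlap score lands on `track_order` (two matching words versus one for `refund`). Ask "where is my order??" right after, and the memory check keeps it from repeating itself. Type gibberish and it admits defeat, which is more than some production bots manage.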

Why It’s Blowing Up Right Now

Conversational AI is having a moment because:

LLMs like GPT-4 and Claude can finally hold long, meaningful conversations (and fake empathy really well).

Businesses are replacing customer service departments with bots (they're cheaper, and bots never unionize).

Everyone wants interfaces you can just “talk” to instead of learning commands or searching.

Human attention spans are crumbling, and talking is easier than typing.

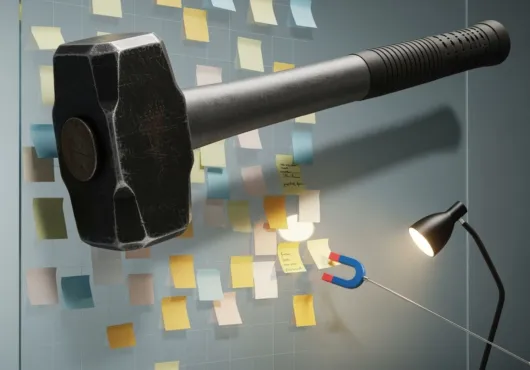

It’s frictionless tech. That means it’s addictive, scalable, and invisible until it screws up.

So, What’s the Catch?

We’d be lying if we said it’s all upside. The downside is exactly why this stuff needs more scrutiny:

Bias baked in: Trained on human data = human prejudice

Privacy black holes: Conversations can be logged, mined, and monetized

Emotional manipulation: AI that “empathizes” can also exploit

Overreliance: People start trusting it more than they should

Black box logic: You don’t know how it came up with that reply—you just see the smiley face emoji and think, “Nice!”

🧩 TL;DR for the Overstimulated

It talks. It listens. It remembers weird stuff. Conversational AI powers everything from your digital assistant to that overly peppy chatbot that swears it can help. It’s fast, slick, and vaguely manipulative. You think you’re using it, but really, it’s learning from you. Welcome to the feedback loop.