You’re being judged by a machine that thinks it knows you. Spoiler: it doesn’t.

Introduction

We’ve all been sold the same AI fantasy: smarter decisions, faster insights, perfectly personalized everything. In the future, the machines will know what we want before we do.

But what if they get it wrong?

What if they decide you’ll default on a loan you’d never miss?

What if they think you’re unfit for a job you’d crush?

What if they decide your mental health is unstable because your playlist got dark?

The problem isn’t just inaccurate predictions—it’s that these predictions are treated like facts.

And you’re the one who pays for it.

AI Predictions Are Everywhere (And They’re Often Invisible)

AI doesn’t just predict the weather. It predicts you.

Right now, somewhere, an algorithm is:

Scoring your creditworthiness using a thousand data points that may or may not include your internet habits.

Filtering your job application based on resume keywords, tone analysis, or social media behavior.

Deciding your health risks based on zip code, previous prescriptions, and maybe your grocery store receipts.

Evaluating your threat level on a subway camera based on posture, clothing, or skin tone.

In every case, the prediction becomes the decision, even when it’s wrong.

When the AI Screws Up—What Does That Look Like?

Let’s walk through the mess.

You get denied a loan.

You’ve got savings. A steady income. But the model didn’t like your spending pattern last March. It thinks you’re a risk. No one can explain why.

You’re flagged at the airport.

You look too nervous. Or too quiet. Or your face was a near-match in some private security dataset built from low-quality CCTV footage.

Your resume never reaches a human.

An AI screener auto-rejects you because your experience is “nontraditional”—even though you’re exactly who the company should be hiring.

Your therapist’s chatbot misfires.

You type “I feel like disappearing.” It reads that as a casual metaphor and moves on.

Or it overcorrects and floods you with crisis resources you didn’t need.

What’s the Fallout?

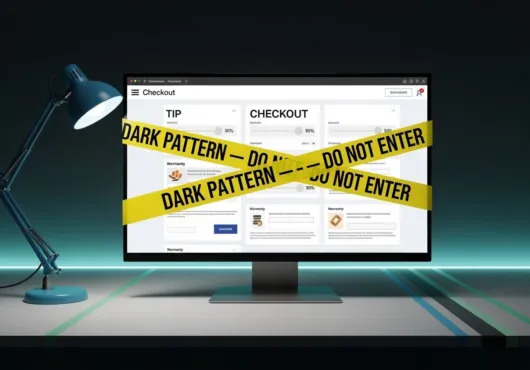

You’re locked out. Decisions are made with no recourse. No appeal. No human override.

You’re mislabeled. The prediction doesn’t stay private. It becomes part of your data profile, shared across platforms.

You internalize it. Over time, if the machine says you’re a risk, you start to wonder if it’s true.

You lose opportunities. Not because of your choices, but because of a system guessing who you might become.

That’s not prediction. It’s preemptive punishment dressed up as optimization.

The Myth of the Oracle

We treat AI like it’s an oracle. Objective. Impartial. Wise.

But here’s the truth:

AI predictions are built on historical data, which means the future they predict is just a remix of the past.

That data? Often biased, incomplete, or flat-out wrong.

And the humans training these systems? They’re making subjective choices—about what counts, what matters, and what outcomes are “acceptable.”

So when the AI guesses wrong, it’s not just a bug. It’s baked in.

Who Gets Hurt the Most?

People on the margins. If you’re not like the data the AI was trained on, you’re more likely to be misjudged.

People who can’t appeal. People who are poor, undocumented, neurodivergent, or formerly incarcerated are already struggling for fair treatment. AI just adds another wall.

People who break the mold. Entrepreneurs, creatives, freelancers, career-switchers—if you don’t follow a linear path, the system doesn’t know what to do with you.

And Here’s the Twist

Once an AI misjudges you, that error can repeat—forever.

A single stray signal becomes part of your permanent record:

You clicked the wrong thing.

You paused too long.

You used the wrong word in a form.

Now you’re part of a new category, and the next system downstream makes its decision based on that label. Each handoff carries the misjudgment forward.
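To make the chain concrete, here is a toy sketch of three systems passing a label down the line. Everything in it is hypothetical: the function names, the 120-second threshold, and the labels are invented for illustration and don't describe any real product.

```python
def form_monitor(profile: dict) -> dict:
    """Hypothetical upstream system: labels anyone who paused on a form."""
    labels = set(profile.get("labels", ()))
    if profile["seconds_on_form"] > 120:  # assumed threshold, purely illustrative
        labels.add("hesitant")
    return {**profile, "labels": labels}


def credit_screen(profile: dict) -> dict:
    """Hypothetical second system: reads the inherited label as evidence of risk."""
    labels = set(profile["labels"])
    if "hesitant" in labels:
        labels.add("high_risk")
    return {**profile, "labels": labels}


def tenant_check(profile: dict) -> str:
    """Hypothetical third system: sees 'high_risk' but not where it came from."""
    return "reject" if "high_risk" in profile["labels"] else "accept"


def run_chain(seconds_on_form: int) -> str:
    # One pause on one form is the only real input; the rest is inherited.
    profile = {"seconds_on_form": seconds_on_form}
    return tenant_check(credit_screen(form_monitor(profile)))
```

Calling `run_chain(300)` ends in a rejection from a system that never saw the form at all. It only saw the label.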

What Can You Do?

1. Push for transparency.

We need to know when predictions are being made, how they’re used, and what data they’re based on.

2. Demand explainability.

If a model made the call, it should tell you why. No more black-box excuses.

3. Build human override into everything.

Automation should assist—not replace—human judgment. Especially in high-stakes decisions.

4. Tell your story. Loudly.

Whether you were misjudged, misflagged, or manipulated—talk about it. We need to humanize the harm.
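For the builders in the room, explainability and human override don't have to be exotic. Here is a minimal sketch, assuming a toy linear credit score: the weights, cutoff, and feature names are all made up for illustration. The model reports which inputs pulled the score down, and it is never allowed to deny anyone on its own. Anything short of a clear approval goes to a person.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weights for a toy linear score; not taken from any real lender.
WEIGHTS = {"income_stability": 2.0, "savings_months": 1.5, "irregular_spending": -3.0}
BIAS = -1.0
APPROVE_AT = 0.0     # assumed cutoff
REVIEW_MARGIN = 1.0  # assumed "too close to call" band above the cutoff


@dataclass
class Decision:
    outcome: str        # "approve" or "human_review"; the model never denies alone
    score: float
    reasons: list[str]  # inputs that lowered the score, most harmful first


def decide(applicant: dict[str, float]) -> Decision:
    # Per-feature contribution to the score: this is the "why".
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    negatives = sorted((value, name) for name, value in contributions.items() if value < 0)
    reasons = [f"{name} ({value:+.2f})" for value, name in negatives]
    if score >= APPROVE_AT + REVIEW_MARGIN:
        return Decision("approve", score, reasons)
    # Borderline or adverse: hand it to a human along with the reasons.
    return Decision("human_review", score, reasons)
```

Real models are rarely this simple, and per-feature reasons get much harder to compute honestly once they aren't linear. The design choice is what matters here: the adverse outcome is a referral with reasons attached, not a verdict.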

Final Word

The problem with letting machines predict your future isn’t that they might be smarter than you.

It’s that they aren’t—

but we still let them decide who you are.

They don’t know your story.

They just know your shadow.

And they’re building a future around it—without asking you first.