Breaking news travels fast. Way faster than you can refresh your feed, and fact-checkers are still stuck in slow motion. By the time the checkers have checked, the correction is already a forgotten footnote buried under viral memes and clickbait.

In a digital arms race, the first casualty is accuracy.

- AI can process information fast, but it doesn’t always get it right.

- Fact-checkers need to be more than just quick; they need to be informed.

- The truth doesn’t wait for a perfect system; it demands human insight and timeliness.

The Speed of Information: Faster Than The Fact-Checkers

In an age where the latest scandal, tweet, or rumor spreads in seconds, fact-checking is becoming an outdated concept. While we’re all racing to consume, fact-checkers are left chasing shadows—unable to verify the truth before the next big thing comes along.

But here’s the kicker: AI is supposed to solve this. As AI tools infiltrate the research space, more people are turning to them for real-time updates, instant answers, and even automatic fact-checking. The catch is that AI doesn’t know the truth; it only knows patterns. It’s really good at regurgitating what’s been said before, but when it comes to nuance, context, or accountability, it has little to offer.

AI Research: The Illusion of Accuracy

AI tools are hailed as the ultimate time-savers, allowing us to skip the heavy lifting of traditional research. But here’s the issue: these tools don’t actually think; they don’t analyze or question. They just pull in data and spit it out. And when they get things wrong, which they often do, the error is invisible. No one sees it, because the AI isn’t required to explain how it reached its conclusion. The truth gets buried under instant answers that don’t always match reality.

With AI becoming more common for tasks like fact-checking itself, we’re entering a world where factual accuracy is secondary. And we’re okay with that. Why? Because the AI doesn’t question its own output, so we feel we don’t have to either.

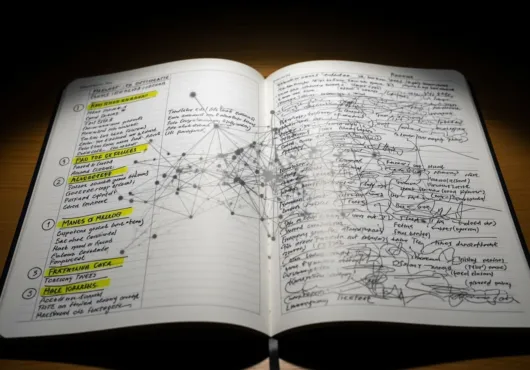

The Problem with AI Research: It Doesn’t Know What’s Missing

While AI is great at pulling together information from a million sources, it still lacks the ability to critically assess what’s missing. Ask it to notice the context that never made it into its sources, and it can’t. It works like a blunt instrument, swinging wildly without concern for what it’s breaking. Sure, it serves up data, but it misses what makes truth alive: the messy, human side of it, the story, the layers, the gray areas that make everything feel real.

And that’s where fact-checkers fill the gap. They’re supposed to be the last line of defense. But if fact-checking is already outdated, then who’s left to dig into the details? Is the AI pulling the right sources? Is the data it’s retrieving legitimate? AI won’t tell you that. It will just give you a hollow conclusion—one that fits neatly into a tweet but doesn’t stand up to scrutiny.

The AI-Driven Misinformation Machine: It’s Only Getting Worse

AI research tools might promise quick fixes, but what happens when we let machines dictate what’s true? Fact-checking has always been about making sure we aren’t misled. But as AI takes over, what’s happening is the reverse: it’s giving us the illusion of truth, without the actual foundation.

This isn’t about AI vs humans—it’s about automation vs authenticity. AI is making it easier than ever to bypass the messiness of truth in favor of speed, and what we’re seeing is a whole industry built around that shortcut. Just type in a prompt, get an answer, and done. It feels efficient, but that’s the problem: efficiency isn’t truth.

The Future of Fact-Checking: A World Where Speed and Accuracy Don’t Mix

We need better systems, ones that allow for nuance and context in real time. But instead, we’re left in a feedback loop of quick responses, AI-generated research, and the illusion of clarity. The real question is: What happens when we outsource critical thinking to machines?

In the speed race, we’re losing the very thing we need: accountability. AI is not the problem; using AI to replace human judgment is. If we continue to rely on AI without human judgment, we’re heading toward a future where truth becomes just another byproduct of convenience—quickly processed, easily ignored.

Final Thoughts: Why AI’s Not the Final Answer

AI can handle the heavy lifting of information processing, but it doesn’t have the context to do it right. What we need is a fact-checking process that goes deeper, questions not just what’s there but what’s missing, and calls out the shortcuts AI might take.

Until then, we are all playing catch-up to automation at the cost of accountability.