You don’t hate AI generation. You hate being gaslit by tidy paragraphs that refuse to sweat. It’s the vendor proposal with flawless grammar and no spine. The student essay that paraphrases the universe but remembers nothing. Fine. We’re switching to receipts: rhythm, memory, source. No $49/month oracle, no moral panic—just a quick way to decide if you can trust the words in front of you. Two minutes, tops. Let’s test it.

Quick tests beat vibes: rhythm, receipts, compression, continuity, “almost right” errors.

Detectors are weather apps—stack them with real checks, don’t crown them king.

Judge traceability and intent; if it can’t show receipts, it doesn’t ship.

Proof, Not Vibes: How to Test for AI Text

You need a fast way to decide: trust it, flag it, or bin it. This is the field manual—the kind you keep open while your inbox lies to your face.

The 30-Second Field Test

(Do this first. No tools. No drama.)

Rhythm check: Read two random paragraphs out loud. If the cadence is eerily smooth but emotionally flat—like a TED Talk read by a calculator—you’ve got robot fingerprints.

Receipt poke: Ask the text for a specific source, date, page, or memory. If it coughs up “as widely reported” or a vague blob, that’s synthetic fog.

Compression test: Yank half the adjectives. If what’s left says almost nothing, you were sold shine, not substance.

Continuity snap: Swap the order of two sentences. If nothing breaks, the prose was templated, not thought.

Edge case: Look for the “almost right” detail (a wrong unit, misnamed law, off-by-one year). AI nails the vibe, misses the nail.
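If you want a number to go with your ears, two of these checks have crude text proxies. The sketch below is a swapped-in heuristic, not part of the field test itself. It measures sentence-length spread as a stand-in for the rhythm check, and it counts vague attributions against concrete anchors for the receipt poke. The phrase list, the regex, and the helper names are illustrative assumptions. Treat a low spread or zero anchors as a reason to read closer, never as a verdict.

```python
import re
import statistics

# Hypothetical starter list of "synthetic fog" attributions; extend for your domain.
VAGUE_PHRASES = (
    "as widely reported",
    "studies show",
    "experts say",
    "research suggests",
    "it is widely known",
)

# Concrete, checkable anchors: four-digit years, page refs, URLs, percentages.
ANCHOR_RE = re.compile(
    r"\b(?:19|20)\d{2}\b|\bpp?\.\s?\d+|https?://\S+|\b\d+(?:\.\d+)?%"
)


def split_sentences(text):
    # Naive split on ., !, ? followed by whitespace; fine for a spot check.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def rhythm_spread(text):
    """Coefficient of variation of sentence lengths, in words.

    Values near 0 mean every sentence is about the same length (the
    'TED Talk read by a calculator' cadence). A prompt to read closer,
    not proof of anything.
    """
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return None  # not enough sentences to measure spread
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def receipt_poke(text):
    """Count vague attributions versus concrete, checkable anchors."""
    lowered = text.lower()
    vague = sum(lowered.count(p) for p in VAGUE_PHRASES)
    anchors = len(ANCHOR_RE.findall(text))
    return {"vague": vague, "anchors": anchors}
```

A paragraph that scores a low spread and returns something like `{"vague": 3, "anchors": 0}` hasn't been caught; it has earned a trip to the receipt checks.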

Receipts That Settle It

When the stakes are high (contracts, claims, research), escalate.

Source triangulation: Click two citations and run a 10-second search. Broken links or invented quotes? Case closed.

Voice mismatch: Compare against known writing from the “author.” Sudden grammar glow-up + zero idiosyncrasies = likely machine help.

Revision trap: Ask follow-ups that require lived experience (“What changed after you tried it?”). AI defaults to summary; humans default to stories.
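The click-two-citations step can be partly scripted. Below is a minimal stdlib sketch, assuming citations appear as plain http(s) URLs in the text. It sends a HEAD request to each link and reports the ones that error out or never answer. The function names and the `receipt-check/0.1` User-Agent string are placeholders, and `fetch` is injectable so you can test it offline. It checks reachability only; it cannot tell you whether a quote actually appears on the page.

```python
import re
import urllib.error
import urllib.request

# Rough URL matcher for prose: stops at whitespace and common closing brackets/quotes.
URL_RE = re.compile(r"https?://[^\s)\]>\"']+")


def extract_urls(text):
    # Trim punctuation that prose tends to glue onto the end of a link.
    return [u.rstrip(".,;:!?") for u in URL_RE.findall(text)]


def fetch_status(url, timeout=10.0):
    """Return the HTTP status for a HEAD request, or None if unreachable."""
    req = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "receipt-check/0.1"}  # placeholder UA
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # server answered, but with an error code (404, 410, ...)
    except (OSError, ValueError):
        return None  # DNS failure, refused connection, timeout, or malformed URL


def broken_citations(text, fetch=fetch_status):
    """List (url, status) pairs for links that error out or never answer."""
    broken = []
    for url in extract_urls(text):
        status = fetch(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

Some servers reject HEAD with a 405, so open those by hand before calling them broken. Ordinary link rot also happens, which makes a dead link in a years-old report weaker evidence than one in yesterday's proposal.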

Detectors Aren’t Oracles (Use Them Like Weather Apps)

AI detectors give useful signals, not verdicts. Treat a “likely AI” flag the way you treat a 40% rain forecast: carry an umbrella, don’t cancel the wedding. Cross-check with the field test and receipts. If a detector says “human,” still verify claims; if it says “AI,” still look for proof. Tools catch patterns; liars read tool docs.

OpenAI retired its own AI text classifier in July 2023, citing its low rate of accuracy: a strong industry signal that raw “AI detection” isn’t reliable.

What Changed in 2025 (and Why Your Gut Feels Broken)

Style drift is trained in: Models got better at copying human tics—hesitation, side-quests, “btw” asides. Your vibe radar won’t save you by itself.

Citations got prettier: Some systems fabricate beautifully formatted sources. The link style is real; the link isn’t.

Hybrids are normal: People hand-edit AI drafts and paste AI passages into their own writing. Don’t waste time hunting “pure AI.” Focus on verifiability and intent (was it used to deceive?).

Workflow: 2-Min Decision Tree

Skim + 30-Sec Test. If it passes with confidence → publish/accept with a note.

One red flag? Pull Receipts (source check + voice compare).

Multiple flags? Run a detector for signal stacking, then request sources or a rewrite with specifics.

Non-cooperation + bad signals? Treat as AI-assisted or untrustworthy and document the decision.
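The decision tree is small enough to write down as a function, which helps a team apply it the same way every time. This is a sketch under simple assumptions: `red_flags` counts every failed check so far (field test, receipts, detector), `cooperated` stays `None` until you've asked for sources, and the action labels and the `decision_record` helper are hypothetical names for illustration.

```python
from datetime import datetime, timezone
from typing import Optional


def decide(red_flags: int, cooperated: Optional[bool] = None) -> str:
    """Next action under the 2-minute decision tree.

    red_flags: failed checks so far (field test, receipts, detector hits).
    cooperated: None if you haven't asked for sources yet, False if the
    author ignored or dodged the request, True if they engaged.
    """
    if red_flags == 0:
        return "accept"             # publish/accept with a note
    if cooperated is False:
        return "untrustworthy"      # non-cooperation + bad signals
    if red_flags == 1:
        return "pull_receipts"      # source check + voice compare
    return "stack_and_request"      # detector as one more signal, then ask for specifics


def decision_record(item: str, red_flags: int, cooperated: Optional[bool]) -> dict:
    # "Document the decision": a plain record to append to a log or ticket.
    return {
        "item": item,
        "red_flags": red_flags,
        "cooperated": cooperated,
        "action": decide(red_flags, cooperated),
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }
```

Re-run `decide` after each step with updated counts; `decision_record` gives you something to paste into the ticket when you close it.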

Images, Art & Photos: 1-Minute Field Test

You don’t need CSI. You need physics and receipts.

Quick sanity scan (read like a bouncer at the door):

Edges & textures: Look for rubbery skin, plastic pores, waxed fruit, “oil-slick” fabrics.

Lighting math: Shadows should agree on direction and softness; reflections should carry the scene they reflect.

Hands, ears, teeth: Still the Bermuda Triangle for weak AI images (extra knuckles, dental picket fences, elf cartilage).

Type & symbols: Street signs, jersey numbers, logos, book spines. AI art loves near-miss typography and gibberish brands.

Geometry: Railings, tile grids, and window muntins should stay straight through the frame; watch for melt and drift.

Lens reality: Does the suspect photo show depth-of-field, chromatic aberration, sensor noise, and motion blur consistent with the shutter speed you’d need? If not, you’re looking at a vibe, not a capture.

Receipts That Settle Image Claims

Ask for the source, not a story.

If it’s a photo: Request the original RAW (CR3/NEF/ARW/DNG), or the untouched HEIC/JPEG straight off a phone, plus 2–3 adjacent frames from the same burst. Real cameras make siblings. So do humans. A single orphan JPEG is cosplay.

If it’s digital art/illustration: Ask for the layered file (PSD/Procreate/Krita) and a time-stamped WIP. Bonus: brush list or process notes.

If they say “I generated it”: Ask for the prompt, sampler/steps, model/checkpoint, seed, and any upscaler pass. Generator output often lands on recognizable dimensions (e.g., 1024×1024, 1536×2048) and can show telltale upscale seams.
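Checking dimensions doesn't need an image library. A minimal sketch, assuming PNG input: a PNG file starts with an 8-byte signature followed by the IHDR chunk, whose first eight data bytes are width and height as big-endian 32-bit integers. The suspect-size set holds only the two examples above and is a placeholder to extend. An exact match is a hint, not proof, since people also export at 1024×1024 on purpose.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Placeholder list: the sizes mentioned above. Extend with whatever you actually see.
SUSPECT_SIZES = {(1024, 1024), (1536, 2048)}


def png_dimensions(data: bytes):
    """Return (width, height) from a PNG's IHDR chunk, or None if not a PNG."""
    # Layout: 8-byte signature, 4-byte chunk length, b"IHDR",
    # then width and height as big-endian unsigned 32-bit integers.
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        return None
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def generator_sized(data: bytes) -> bool:
    """True if the image lands exactly on a suspect size, in either orientation."""
    dims = png_dimensions(data)
    if dims is None:
        return False
    w, h = dims
    return (w, h) in SUSPECT_SIZES or (h, w) in SUSPECT_SIZES
```

Only the first 24 bytes of the file matter, so reading just those is enough. JPEG and WebP store dimensions differently and would need their own parsers.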

Two cheap moves that pay off:

Reverse image search (multiple crops): find older postings, stock siblings, or the real photographer.

Metadata peek: Don’t worship EXIF—just compare fields logically (camera model vs lens vs ISO vs time). Fake EXIF often forgets to fake consistency.
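The “compare fields logically” step works best across the adjacent frames you asked for. A sketch, assuming you have already extracted each frame's tags into a dict keyed by standard EXIF names (`Model`, `LensModel`, `DateTimeOriginal`) with whatever tool you trust. The `burst_problems` name and the `max_gap_s` threshold are illustrative guesses, not a standard.

```python
from datetime import datetime

EXIF_TIME = "%Y:%m:%d %H:%M:%S"  # EXIF DateTimeOriginal format


def burst_problems(frames, max_gap_s=10):
    """Return human-readable inconsistencies across a claimed burst.

    frames: list of dicts keyed by EXIF tag names, already extracted.
    max_gap_s: hypothetical threshold for 'same burst'; tune to taste.
    """
    problems = []
    # Body and lens should not change between sibling frames.
    for field in ("Model", "LensModel"):
        values = {f.get(field) for f in frames}
        if None in values:
            problems.append(f"{field} missing on some frames")
        present = values - {None}
        if len(present) > 1:
            problems.append(f"{field} changes mid-burst: {sorted(present)}")
    # Timestamps should parse, run forward, and sit close together.
    times = []
    for i, f in enumerate(frames):
        raw = f.get("DateTimeOriginal")
        try:
            times.append(datetime.strptime(raw, EXIF_TIME))
        except (TypeError, ValueError):
            problems.append(f"frame {i}: unreadable DateTimeOriginal {raw!r}")
    if times and times != sorted(times):
        problems.append("timestamps out of order")
    if len(times) >= 2 and (max(times) - min(times)).total_seconds() > max_gap_s:
        problems.append("frames too far apart to be one burst")
    return problems
```

`DateTimeOriginal` only has one-second resolution, so burst frames sharing a timestamp is normal; a camera swap or a clock running backward mid-burst is not.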

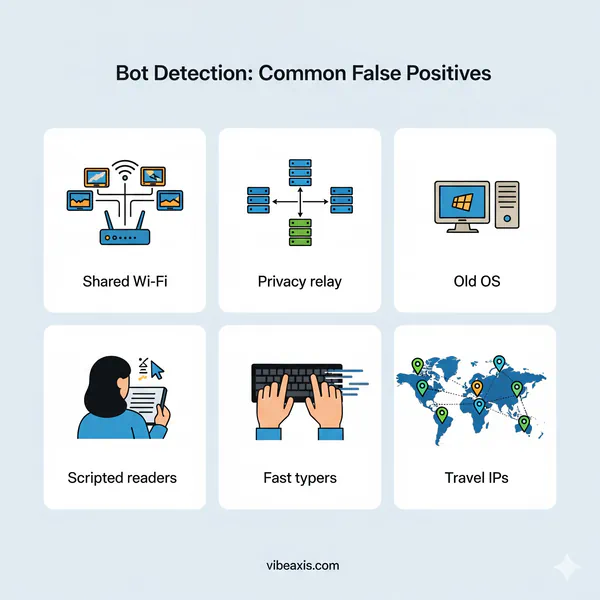

Common False Positives (Don’t Flag the Good Stuff)

Makeup, beauty retouching, and phone portrait modes can mimic “AI smooth.” Look for a repeatable sequence (burst frames) and lens-realistic bokeh instead of the “cutout halo” you see in sloppy generators.

Stylized digital painting isn’t automatically AI art. Layer history and WIP shots are the tie-breaker—machines don’t have brush diaries.

FAQ

Q: Is this AI generated?

A: Maybe. Run the 30-Second Field Test, then ask for verifiable sources. If claims crumble or links ghost, treat it as AI-assisted or unreliable.

Q: Is this AI written or human?

A: Often both. Judge truthfulness and traceability, not purity. Mixed authorship that’s well-sourced beats “human” nonsense.

Q: Can detectors prove it?

A: No. They estimate. Use them to stack signals, not to deliver a verdict in handcuffs.

Q: How do I check for free?

A: Field Test → search two claims → request one concrete receipt (URL, page, timestamp). If you get smoke, you’ve got your answer.

Q: Is this an AI image?

A: Run the physics test (lighting, reflections, geometry), check hands/teeth/type, then ask for a RAW or layered file. If they can’t produce a source or a matching sequence, treat it as likely AI-generated or, at minimum, unverified.

Q: Is this AI art or human-made?

A: Ask for layers and WIP with timestamps. Human art has process receipts; AI art has prompts and seeds. Both can be legit; credit and label based on proof.

Q: Can metadata prove a photo is real?

A: No. EXIF can be forged. Use it to catch inconsistencies, not to crown a winner. Proof lives in RAW + adjacent frames + context.

Policy sanity check (for classrooms & teams)

Make your rule the outcome, not the tool: “If you can’t show sources or explain your process, it doesn’t ship.” That catches AI fakery and human bluffing with the same net—and rewards people who do the work.