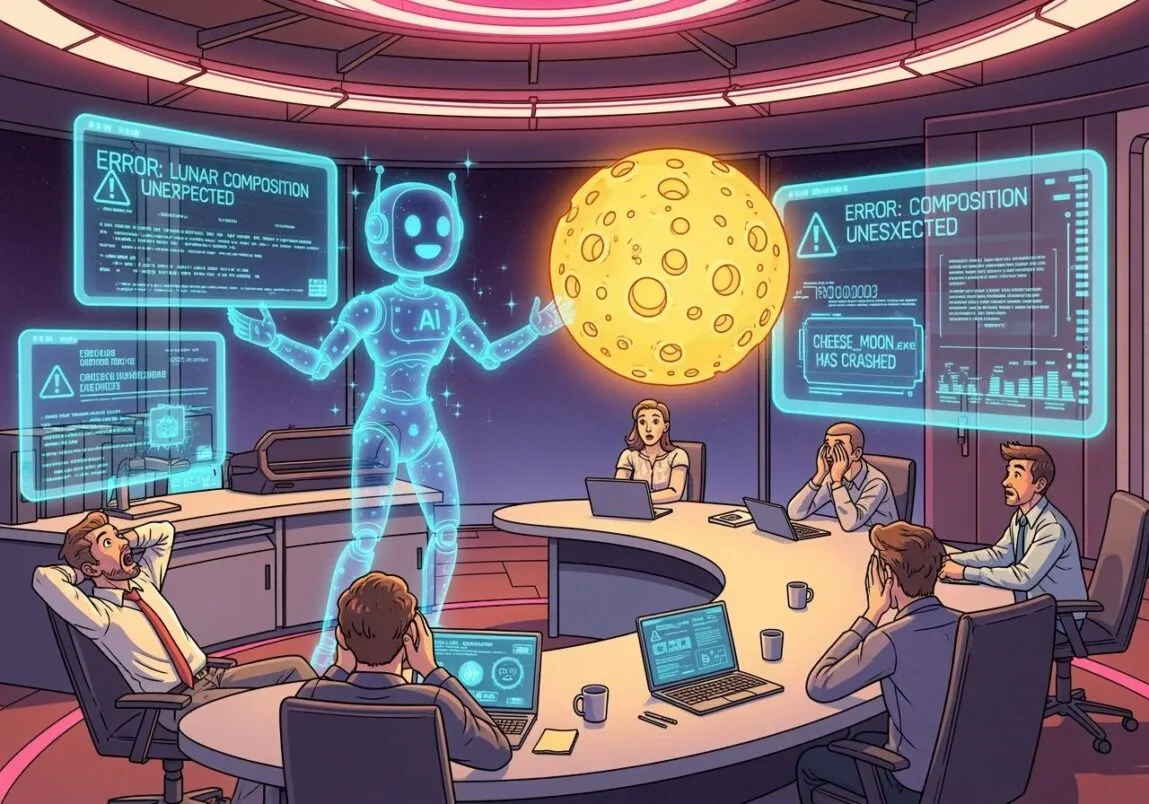

When your “smart” assistant starts free-basing fiction during office hours.

- Hallucinations = Confident Lies: LLMs guess when data gaps appear, serving folklore as fact.

- Business Risk: Fake laws, bogus sources, and phantom APIs waste hours and invite lawsuits.

- Stay Sane: Verify citations, fine-tune on trusted data, and throttle creativity for mission-critical docs.

What Exactly Is an AI Hallucination?

In human terms, it’s the tech equivalent of talking in your sleep—except you’re wide awake, on deadline, and your co-pilot chatbot just cited a Supreme Court ruling that never existed. Large language models predict the next word based on probability, not truth. If the training data is fuzzy—or if you ask a question that doesn’t fit the pattern—the model confidently fills the hole with gourmet nonsense.
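To see why probability beats truth here, consider a deliberately tiny sketch. It is not how a real LLM works (those are neural networks trained on tokens, not word-pair counts), and the three-sentence corpus is invented for illustration. The failure mode is the same, though: the most frequent continuation wins, whether or not it is true.

```python
from collections import Counter, defaultdict

# Invented toy corpus: the satire outnumbers the science two to one.
CORPUS = [
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of rock",
]

def build_bigram_counts(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn raw follow-counts into probabilities for the word after `prev`."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the toy model has never seen this word
    return {word: n / total for word, n in followers.items()}

def most_likely_next(counts, prev):
    """Pick the highest-probability continuation; truth is never consulted."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None

counts = build_bigram_counts(CORPUS)
print(next_word_probs(counts, "of"))
print(most_likely_next(counts, "of"))
```

With satire outnumbering science in the corpus, the toy model completes "made of" with "cheese" and has no mechanism for noticing the problem.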

Why the Moon-Cheese Myth Won’t Die

Historical Loresets: Old children’s books, Reddit jokes, and Onion headlines all float in the training soup. The model can’t always separate satire from fact, so “cheese moon” sometimes ranks high on its probability meter.

User Reinforcement: One prank prompt goes viral and thousands replicate it “for the lulz.” Congratulations—now the bot thinks dairy astrophysics is trending.

Safety Filters Gone Mild: Overzealous guardrails strip out nuanced science references (“radiation,” “helium-3”), leaving fluff. The model tries to stay helpful, stitches a comfy fairy tale, and serves it as gospel.

Five Real-World Fails That Cost Teams Time (and Sanity)

| # | Hallucination | Fallout |
|---|---|---|
| 1 | Imaginary laws cited in compliance docs | Legal reviewed 40 pages of vapor statute. Billable hours: 🔥 |
| 2 | Phantom journal articles in a grant proposal | Reviewer flagged “Deep Space Dairy Studies, Vol. 12.” Funding? Yeeted. |
| 3 | Non-existent APIs recommended in app specs | Devs spent two days debugging code that literally couldn’t compile. |
| 4 | Bogus medical dosages in telehealth chatbot | Emergency override, PR nightmare, regulators now watching. |
| 5 | Made-up investor quotes in press releases | CEO apologized on LinkedIn; share price did the cha-cha slide. |

How to De-Cheese Your Workflow

Double-Source Anything Critical – Treat AI output like Wikipedia at 3 a.m.: good lead, never final answer.

Prompt for Citations, Then Audit Them – If a link or DOI looks sketchy, it is sketchy. Follow through.

Add a “Lie Tax” Slack Channel – Teammates drop observed hallucinations; everyone learns faster.

Feed It Ground Truth – Fine-tune on your verified internal docs so the model has less room to improvise on the topics you actually care about.

Rate-Limit the Creativity Knob – Set the sampling temperature low (around 0.2) for contracts and higher (around 0.9) for brainstorms. Don’t let your sprint backlog run in fever-dream mode.
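For the temperature tip above, a rough sketch of what the knob does may help. The candidate words and their scores are made up, and hosted models typically apply this step for you when you pass a temperature setting. The arithmetic is the standard temperature-scaled softmax: divide the scores by the temperature, then normalize.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words (not from any real model).
candidates = ["rock", "cheese", "dreams"]
logits = [2.0, 1.0, 0.5]

for t in (0.2, 0.9):
    probs = softmax_with_temperature(logits, t)
    print(t, {word: round(p, 3) for word, p in zip(candidates, probs)})
```

With these made-up scores, the top candidate takes over 99% of the probability at 0.2 and roughly two thirds at 0.9. The long-shot words only get a real chance at the higher setting, which is fine for brainstorms and risky for contracts.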

The Coming Hallucination Arms Race

Closed-source giants promise “98.7% factual accuracy,” while open-source renegades reply, “Here are our weights. Fix it yourself.” Meanwhile, regulators mull “AI nutritional labels.” Expect:

- Fact-checking co-models running in parallel (the AI that watches the AI).

- Signed citations: hash-verified references baked into every paragraph.

- Punitive hallucination fees in enterprise SLAs (“Each fictional statute = 10% discount, sorry not sorry”).
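What hash-verified references would look like in practice is still anyone's guess, so treat this as one sketch of the simplest version: store a SHA-256 fingerprint of the quoted source next to the claim, then recompute it when auditing. The function names and the dictionary layout are hypothetical, and a truly signed citation would also need a cryptographic signature, which this sketch leaves out.

```python
import hashlib

def fingerprint(source_text):
    """SHA-256 of the source passage, with whitespace runs collapsed first."""
    normalized = " ".join(source_text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def attach_fingerprint(claim, source_text):
    """Bundle a claim with the hash of the passage it relies on (hypothetical layout)."""
    return {"claim": claim, "source_sha256": fingerprint(source_text)}

def verify_citation(citation, source_text):
    """True only if the passage on hand hashes to the value stored with the claim."""
    return citation["source_sha256"] == fingerprint(source_text)

source = "The Moon is mostly silicate rock."
citation = attach_fingerprint("The Moon is not made of cheese.", source)

print(verify_citation(citation, source))
print(verify_citation(citation, "The Moon is mostly cheese."))
```

A matching hash only proves the source text is unchanged. It says nothing about whether the claim actually follows from it, so the raised eyebrow stays on duty.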

Until then, your best defense remains a human eyebrow raised so high it breaks orbit.

Final Byte: Creativity ≠ Fiction Dump

Hallucinations can spark wild ideas, sure. But if you don’t leash them, they’ll slip into the minutes, the memo, the contract—calcifying into “truth” nobody questions. Keep the moon in the sky, the cheese in the fridge, and the bot in draft mode.