“We built precision. We forgot contingencies.”

Cold open

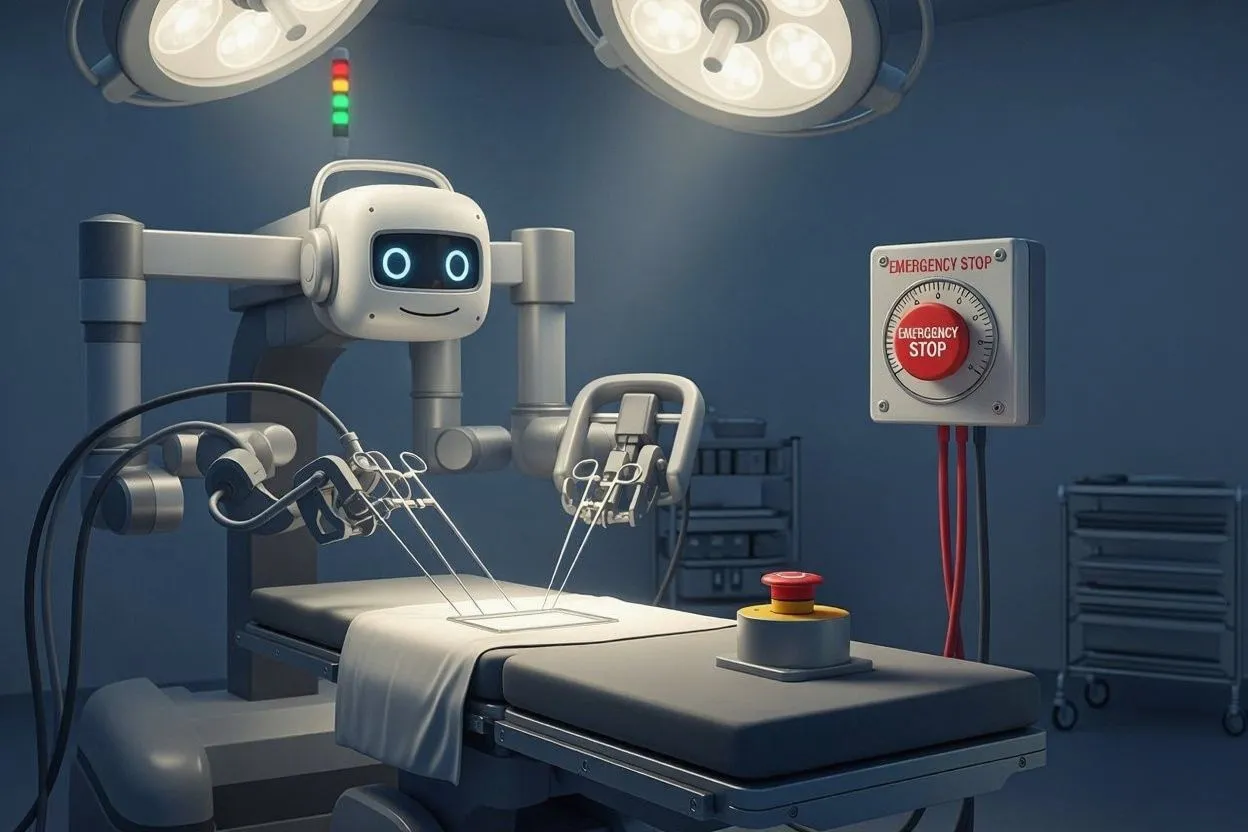

The robot hands don’t shake. The humans do. The surgeon hovers at the console like a gamer facing the final boss; the patient is a quiet cathedral of beeps. Then a polite dialog pops up (Recommend Tool Change?) and freezes mid-sentence. The network blips. The auth token expires. The world’s most expensive progress bar materializes above a real, open body. And suddenly the safest system in the room is the dumbest one: the analog stop button that’s never had a firmware update.

Surgical robots don’t fail like robots; they fail like systems—auth tokens, updates, timing, and UX.

Real safety = legible states, true overrides, offline defaults, and predictable degradation.

Sell the boring demo: nothing flashy, everything explainable, failure handled in daylight.

The lie of “it just works” in a room where nothing can fail

Surgical robotics demos are gorgeous: millimeter-perfect motion, sub-millimeter tremor cancellation, endoscope video crisp enough to shame a nature documentary. But demos assume a polite universe. Operating rooms don’t. They’re RF soup, tangled cables, moving bodies, and a nesting doll of dependencies: camera, gripper, torque sensors, console, vision assist, routing switch, license daemon, logging service, vendor VPN, hospital firewall, identity provider, time sync, backup power. You didn’t buy a robot; you adopted an ecosystem that throws tantrums.

In the demo, the AI suggests an instrument swap because the tissue “looks fibrotic.” In the OR, the same suggestion arrives three seconds late because the inference box jumped to the guest Wi-Fi, and the instrument cart has already moved. Precision math meets human choreography and loses on lag.

Failure you can’t sterilize: auth, updates, and the long tail

We pretend the scary failures are mechanical—arms tearing, sutures snapping—but the modern risk lives in boring places: a TLS certificate that dies at 2:37 p.m., a vendor update pushed the night before that quietly changed a USB driver, a hardcoded NTP server that went dark, a telemetry process eating 12% CPU because a log file rotated wrong. None of these make a marketing slide. All of them make a mess.

Hospitals aren’t SaaS startups; you can’t “please refresh” a human. Yet the industry keeps shipping surgical stacks that assume uptime is a motivational poster. When software insists on being the protagonist, the OR becomes a stage for compliance theater: a wall of dashboards saying “all green” while a nurse mutters that the pedal latency is off by a beat.

The button problem: override that actually overrides

Everyone loves the big red button: symbol of control, meme of safety. But what does it mean? Does it freeze arms? Retract tools to safe pose? Transfer control to manual? Drop power? Open clamps? In too many systems, “override” is a vibes-based promise backed by a PDF. The semantics aren’t legible to the people who have to act on them within two seconds, under stress.

True override is composable and inspectable. Show me the state machine with named, visible states: Assist, Hold, Retract, Manual—with guaranteed timing and mechanical limits documented like they actually matter (because they do). Say the state out loud. Put it on the ceiling monitors. Make it obvious to the circulating nurse from fifteen feet away. “Explainable motion” beats black-box bravado when the patient isn’t a simulation.
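One way to make those named states inspectable is an explicit transition table that announces every move. The sketch below is a minimal Python illustration, assuming only the four states named above; the transition rules, the millisecond figures in `ENTRY_BUDGET_MS`, and the class name `OverrideStateMachine` are hypothetical and not taken from any real surgical system. It records timing budgets but does not enforce them; a real controller would have to do that in firmware and hardware.

```python
from enum import Enum


class Mode(Enum):
    """The four named states from the text; everything else here is hypothetical."""
    ASSIST = "Assist"
    HOLD = "Hold"
    RETRACT = "Retract"
    MANUAL = "Manual"


# Hypothetical transition table. HOLD is reachable from every other state,
# and MANUAL can only be left by going back through HOLD.
ALLOWED = {
    Mode.ASSIST: {Mode.HOLD, Mode.RETRACT, Mode.MANUAL},
    Mode.HOLD: {Mode.ASSIST, Mode.RETRACT, Mode.MANUAL},
    Mode.RETRACT: {Mode.HOLD, Mode.MANUAL},
    Mode.MANUAL: {Mode.HOLD},
}

# Hypothetical worst-case entry times in milliseconds, published per state.
ENTRY_BUDGET_MS = {Mode.ASSIST: 500, Mode.HOLD: 50, Mode.RETRACT: 1500, Mode.MANUAL: 200}


class OverrideStateMachine:
    def __init__(self) -> None:
        self.mode = Mode.HOLD      # power on into the most conservative state
        self.log: list[str] = []   # every announcement, in order

    def _announce(self, message: str) -> None:
        # Stand-in for ceiling monitors and voice output: record and print.
        self.log.append(message)
        print(message)

    def request(self, target: Mode, reason: str) -> bool:
        """Attempt a transition; announce the outcome whether it succeeds or not."""
        if target not in ALLOWED[self.mode]:
            self._announce(f"REFUSED {self.mode.value} -> {target.value}: {reason}")
            return False
        previous, self.mode = self.mode, target
        self._announce(
            f"{previous.value} -> {target.value}: {reason} "
            f"(complete within {ENTRY_BUDGET_MS[target]} ms)"
        )
        return True

    def override(self) -> None:
        """The big red button: always ends in HOLD and is safe to press repeatedly."""
        if self.mode is Mode.HOLD:
            self._announce("Hold: override acknowledged, already holding")
            return
        self.request(Mode.HOLD, "override pressed")


if __name__ == "__main__":
    robot = OverrideStateMachine()
    robot.request(Mode.ASSIST, "surgeon engaged assist")
    robot.override()
    robot.override()
    robot.request(Mode.MANUAL, "surgeon takes direct control")
    robot.request(Mode.ASSIST, "assist re-requested")  # refused: must pass through HOLD
```

The design choice worth noticing: the override is idempotent, and a refused transition is announced just as loudly as an accepted one, so the room never has to guess which state the arms are in.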

Whose patch is it anyway?

When an OR robot misbehaves, three gods argue: the hospital IT team, the vendor’s field engineer, and Legal. The surgeon wants a root cause. The vendor wants a ticket number. IT wants to know why a life-critical device is trying to reach an update server in another country. Meanwhile, the log buffer is full, the support tunnel is down, and the only person who understands the kernel module is asleep in Zürich.

SLAs don’t save patients; architectures do. If your robot needs cloud identity to change a scalpel, you don’t have a robot; you have a compliance hobby. Don’t ask the network to be flawless. Assume it will fail constantly, and design the robot to degrade loudly and safely when it does.
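A minimal sketch of “offline defaults” in Python, assuming each network-backed check can be wrapped in a function that either answers or raises. The names `check_with_offline_default`, `DependencyUnavailable`, and `cloud_identity_down` are hypothetical. The idea it illustrates: the fallback for each action is decided ahead of time and logged whenever it is used, rather than discovered mid-case.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.WARNING, format="%(levelname)s %(message)s")
logger = logging.getLogger("or_robot")


class DependencyUnavailable(Exception):
    """Raised by a (hypothetical) network-backed check that cannot answer."""


def check_with_offline_default(
    name: str,
    remote_check: Callable[[], bool],
    offline_default: bool,
) -> bool:
    """Ask a network dependency, but never let its absence decide by accident.

    If the check raises DependencyUnavailable or an OSError (which covers
    ConnectionError and TimeoutError), log a warning and return the default
    chosen in advance for this specific action.
    """
    try:
        return remote_check()
    except (DependencyUnavailable, OSError) as exc:
        logger.warning("%s unavailable (%r); using offline default: %s",
                       name, exc, "allow" if offline_default else "deny")
        return offline_default


def cloud_identity_down() -> bool:
    # Hypothetical stand-in for an identity-provider call that times out.
    raise TimeoutError("identity provider did not respond")


# A tool change is local and safety-relevant: it stays available offline.
tool_change_ok = check_with_offline_default("identity (tool change)", cloud_identity_down, True)
# Remote vendor access is not needed mid-case: it fails closed.
remote_access_ok = check_with_offline_default("identity (remote access)", cloud_identity_down, False)
print(tool_change_ok, remote_access_ok)  # True False
```

The same outage produces opposite answers for the two actions, and both outcomes leave a warning in the log.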

Make it boring on purpose

“Boring” is not a slur; it’s a safety feature. Give me conservative motion profiles with visible caps. Give me offline modes that degrade gracefully. Give me locally signed models with checksums printed on a sticker in the OR. Give me dependency manifests the size of a restaurant menu—and a culture where reading them isn’t considered nerdy, it’s considered hygiene. Give me a user interface that announces every state change like a flight attendant: what’s happening, why, and what happens next.
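A checksum on a sticker only helps if someone can check it quickly. The following is a minimal Python sketch, assuming the sticker carries the model file’s full SHA-256 digest in hex: it hashes the file in chunks, compares it with what was typed in from the sticker, and says out loud which happened. It covers only the checksum half; verifying a local signature would need a public-key library and key-management choices the text does not specify. The function names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so a large model file never loads into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def sticker_format(hex_digest: str, group: int = 8) -> str:
    """Split a hex digest into short groups a human can read aloud and compare."""
    return " ".join(hex_digest[i:i + group] for i in range(0, len(hex_digest), group))


def verify_model(path: Path, sticker_text: str) -> bool:
    """Compare the file on disk with the digest printed on the OR sticker."""
    expected = "".join(sticker_text.split()).lower()  # tolerate spacing and case
    actual = sha256_of(path)
    if actual != expected:
        print(f"MODEL MISMATCH: {path.name}")
        print(f"  on disk: {sticker_format(actual)}")
        print(f"  sticker: {sticker_format(expected)}")
        return False
    print(f"Model verified: {path.name} ({sticker_format(actual)})")
    return True
```

Grouping the digest into eight-character blocks is a small human-factors choice: a nurse can compare one block at a time instead of squinting at 64 characters.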

Human factors, not hype, decide whether the robot earns trust. Surgeons are not Luddites; they’re latency realists. They’ll forgive a slower assist that telegraphs intent. They won’t forgive a faster one that occasionally ghosts.

The ethics nobody markets

Informed consent should include systems risk. Not just “the surgeon will use a robotic system,” but: How does it behave when the network drops? Who can remote in? What gets logged? How quickly can it be put in manual? If a model is involved, what data trained it, and who signs off when that model changes between Tuesday’s case and Friday’s?

Transparency isn’t regulatory theater. It’s how you turn “trust me” into “watch me.” When tooling can act, the patient deserves receipts.

The pitch that actually lands

Forget the glossy demo reel. Show the OR running on backup power with the network unplugged, the AI assist disabled, and the override hammered four times in a row. Show the logs rotating. Show the arm retracting to safe pose in a predictable arc, every time. Show the surgeon smiling because nothing surprising happened.

If you want “innovation,” make it uneventful. If you want adoption, make it boring. If you want safety, make it obvious.