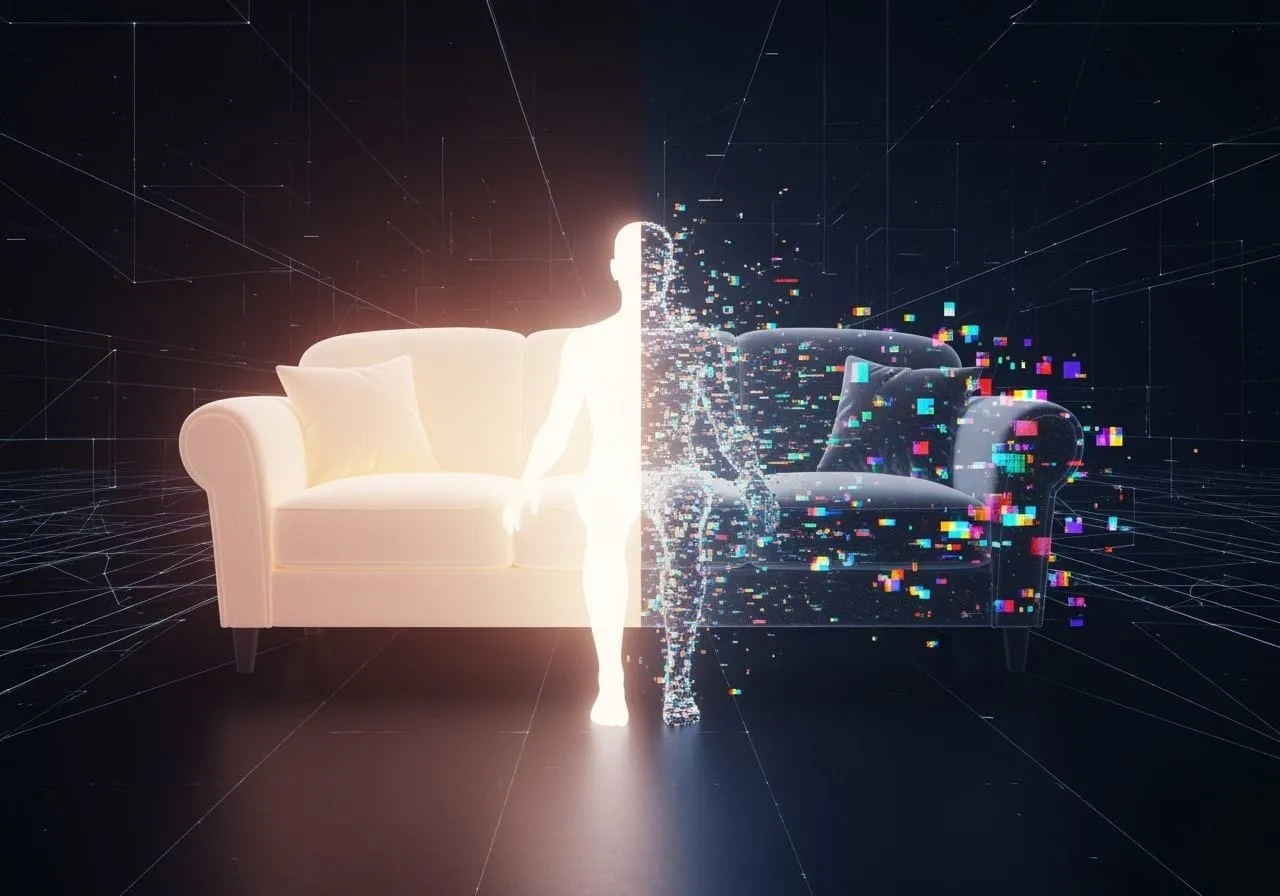

You are not who you think you are—at least not online. While you sleep and scroll—mindlessly clicking, passively consenting—AI is busy behind the curtain. Building a version of you from fragments: what you watch, what you buy, what you like, what you forget. A digital replica with your name, but none of your nuance. A ghost in the machine who wears your face but dances to the algorithm’s beat.

This isn’t science fiction. It’s surveillance capitalism on autopilot. Your digital doppelgänger already exists, and it’s being sold, judged, and weaponized without your permission.

What Is a Digital Doppelgänger?

A digital doppelgänger is not just your data—it’s a dynamic, AI-shaped simulation of your behavior, choices, and likely future actions. Think of it as:

- Your predicted buying patterns

- Your estimated beliefs

- Your simulated personality

- Your algorithmically rendered face and voice (in deepfake territory)

It’s not you. But it acts like you.

And most importantly—it’s used in your place.

🔍 Where It’s Already Happening:

1. Targeted Advertising

You’re not being shown ads for who you are now, but for who your doppelgänger might become.

“We noticed you clicked on one candle—so here’s a gendered spiritual rebrand and $400 worth of witchcore decor.”

2. Facial Recognition Databases

Your face is scanned, stored, and compared to datasets used by police, government, and private tech.

You might be misidentified as a suspect, or matched to someone else’s crime, because your digital twin looks too much like theirs.

3. Voice + Behavior Cloning

Companies are capturing your tone, your cadence, and even your typing patterns to train AI models. Some tools let strangers replicate your voice to say things you never would.

Your digital clone might pitch products, read bedtime stories, or scam your relatives in a robocall from “you.”

4. Predictive Profiles

Loan approvals, job screenings, or government flagging systems may use AI to forecast how “risky” or “reliable” your behavior might be—based on people like you.

It’s not discrimination if it’s just probability, right? (Wrong.)
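To make "based on people like you" concrete, here is a minimal sketch of cohort-based scoring. Everything in it is hypothetical: the features, the past outcomes, and the function name `cohort_risk_score` are invented for illustration and do not describe any real lender's or employer's system. The score is just the average outcome of the `k` most similar past records, so it says nothing about what the person being scored has actually done.

```python
import math

def cohort_risk_score(applicant, history, k=3):
    """Average outcome of the k records most similar to `applicant`.

    `history` is a list of (features, outcome) pairs, where outcome is
    1 for a past default and 0 otherwise. All data here is made up.
    """
    # Rank past records by Euclidean distance to the applicant's features.
    by_distance = sorted(history, key=lambda rec: math.dist(applicant, rec[0]))
    nearest = by_distance[:k]
    # The "risk" is simply how often the look-alikes defaulted.
    return sum(outcome for _, outcome in nearest) / len(nearest)

# Hypothetical features: (age in decades, moves in the last 5 years)
history = [
    ((2.5, 4), 1),
    ((2.6, 3), 1),
    ((2.4, 4), 0),
    ((5.0, 0), 0),
    ((4.8, 1), 0),
]

applicant = (2.5, 4)
score = cohort_risk_score(applicant, history, k=3)
print(round(score, 2))
```

In this toy data the applicant's own record plays no part beyond locating neighbors. Two of the three closest look-alikes defaulted, and that alone sets the score.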

How It Works (In Messy Reality)

- You generate data—constantly.

- That data gets stored, sold, and fed into machine learning models.

- Models use it to simulate what someone like you would do, buy, say, or think.

- That simulated version gets used to:

  - Nudge you (recommendations, pricing, manipulation)

  - Replace you (in customer service, voiceovers, creative fields)

  - Judge you (through opaque risk-scoring systems)

You don’t have access to this version. But companies do.

And they trust it more than they trust you.
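The steps above can be sketched in a few lines. This is a deliberately crude stand-in for a real machine learning model, assuming nothing more than a click log: it counts which action tends to follow which, then uses those counts to guess your next move. The function names `build_profile` and `predict_next` and the action labels are hypothetical.

```python
from collections import Counter, defaultdict

def build_profile(events):
    """Count which action tends to follow each action in a click log."""
    transitions = defaultdict(Counter)
    for current, following in zip(events, events[1:]):
        transitions[current][following] += 1
    return transitions

def predict_next(profile, last_action):
    """Return the most frequent follow-up to `last_action`, or None."""
    followers = profile.get(last_action)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical click log for one user; the action names are invented.
events = [
    "view_candle", "view_incense",
    "view_candle", "view_incense",
    "view_candle", "buy_candle",
]

profile = build_profile(events)
suggestion = predict_next(profile, "view_candle")
print(suggestion)
```

The profile knows only what the log contains. If your habits change tomorrow, it keeps predicting the old pattern until enough new clicks outweigh the old ones.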

The Problem With Being Predictable

Your digital twin doesn’t care about your nuance. It can’t see context. It doesn’t know you’ve changed.

It’s built from you, but flattened—datafied and repeated so often it becomes your algorithmic shadow.

You think you’re in control. But your doppelgänger is the one doing the talking, the buying, the convincing—and sometimes, the deciding.

Why This Should Freak You Out (Just a Little)

Consent is broken. You never agreed to be cloned—just to “enhance your experience.”

Bias is baked in. These systems learn from flawed data, meaning your doppelgänger might be racist, sexist, or just plain wrong about you.

Ownership is nonexistent. You don’t own your digital twin. But others can profit from it.

Reputation risk is real. Deepfakes, fake reviews, voice clones, synthetic media—any of it can damage your real-world identity.

You Are Not the Only You Anymore

The real danger isn’t just what AI knows—it’s what it imagines about you.

And that imaginary version is slowly becoming more visible, more profitable, and more influential than the flesh-and-blood human reading this sentence.

So What Can You Do?

- Minimize exposure. Use privacy tools, opt out when you can, and stop oversharing like every click isn’t currency.

- Demand regulation. Push for laws that address deepfakes, data ownership, and biometric privacy.

- Tell people. The more we drag this into the sunlight, the less power it has.

- Stay unpredictable. Don’t be easy to model. Mess with your own data trail. Confuse the feed.

Final Thought:

You are no longer just a user.

You are the product, the prototype, and the ghost in someone else’s machine.

And your digital doppelgänger? It’s not evil. It’s just… useful to someone else.

Not to you.