More tokens ≠ more truth.

You paste fifty pages into your shiny AI and it nods like a therapist who bills by the paragraph. Two minutes later, it confidently invents a citation from a book you didn’t include. That’s not memory; that’s improv with better lighting. The “context window” is just whatever the model can see right now. The model forgets all of it the instant the call ends unless you store it somewhere.

Context window ≠ memory; it’s a disposable sandbox.

Bigger windows still hallucinate—attention dilution and conflicting instructions don’t disappear.

Store state yourself; use retrieval + inline sources for answers you can back up.

The Mix-Up: Memory vs. Temporary Glue

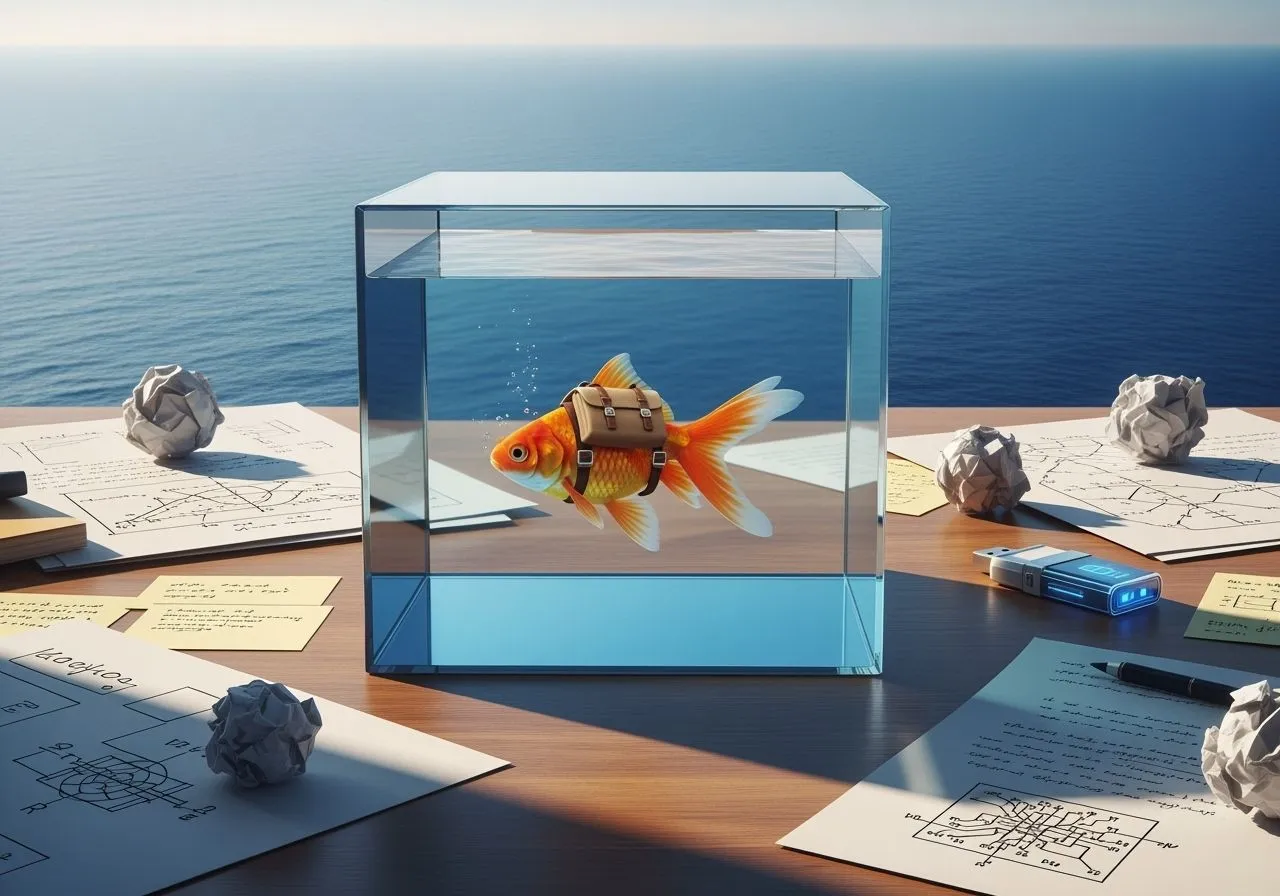

The context window is a sandbox: prompt + history + attachments. Real memory is persisted state you can reload tomorrow. Without that, the model is Dory from Finding Nemo—charming, helpful, unwell at retention. Calling it “memory” just sets people up to trust vibes over receipts.

Why Bigger Windows Still Hallucinate

Give a model 200k tokens and it’ll happily swim in them. That doesn’t mean it understands all of them. Attention spreads thin, instructions conflict, and the model will resolve ambiguity the fastest way it knows: by making up plausible nonsense. You fed it an ocean; it drank a mirage.

What You Actually Want: State + Receipts

You don’t need “infinite memory.” You need curated facts and verifiable sources the model can fetch on demand.

Minimal working setup:

- Chunk your source docs (small, overlapping).

- Index them (embeddings or keyword—yes, defaults are fine).

- Retrieve only the top-k relevant chunks per question.

- Paste those with inline citations and force the model to answer only from them.
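Here is one way the four steps above could look in code. Treat it as a minimal sketch, not a reference implementation: it uses plain keyword overlap instead of embeddings, and every name in it (`chunk`, `build_index`, `retrieve`, `build_prompt`, the `doc#n` citation ids) is made up for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text, size=40, overlap=10):
    """Split text into word windows of `size` words that share `overlap` words."""
    words = text.split()
    if not words:
        return []
    step = max(size - overlap, 1)  # guard against overlap >= size
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, stop, step)]


def build_index(docs, size=40, overlap=10):
    """docs maps a document id to its text; returns a flat list of chunk records."""
    index = []
    for doc_id, text in docs.items():
        for n, piece in enumerate(chunk(text, size, overlap)):
            index.append({
                "id": f"{doc_id}#{n}",  # hypothetical citation id format
                "text": piece,
                "terms": Counter(tokenize(piece)),
            })
    return index


def retrieve(index, question, k=3):
    """Return up to k chunks ranked by how often they contain the question's terms."""
    q_terms = set(tokenize(question))
    scored = [(sum(c["terms"][t] for t in q_terms), c) for c in index]
    scored = [(score, c) for score, c in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


def build_prompt(question, chunks):
    """Paste the retrieved chunks with their ids and restrict the model to them."""
    sources = "\n".join(f"[{c['id']}] {c['text']}" for c in chunks)
    return (
        "Answer using ONLY the sources below. Cite the source id in brackets "
        "after each claim. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )
```

Call `build_index` once over your documents, then `retrieve` and `build_prompt` per question. Swapping the keyword scorer for embeddings changes `retrieve` and nothing else, which is the point: the model only ever sees a handful of labeled chunks.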

The Red Flags to Watch

If your assistant “remembers” personal details with no profile store, it’s bluffing. If it answers beyond the provided sources, that’s not genius—it’s a costume change. If it gets better after you copy the answer into the prompt… congrats, you are the memory.

A Simple Falsifier (Prove Me Wrong)

Run 100 Q&As with ground truth. Same model, small window vs. huge window, no retrieval, no tools. Count fabricated sources. If hallucinations fall to nearly zero only by enlarging the window, I’ll eat my hoodie. Otherwise, accept that “more tokens” just delays the moment the model confesses it’s guessing.
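If you want to actually run that bet, the counting part is small. The sketch below assumes your answers cite sources in square brackets and that each test case carries the ids of the documents you really supplied. The `ask` callable is a hypothetical wrapper around whatever model call you use, and the two stand-in "models" exist only so the harness runs without an API key.

```python
import re

# Assumption: citations appear in the answer as [source id].
CITATION = re.compile(r"\[([^\[\]]+)\]")


def fabricated_sources(answer, allowed_ids):
    """Return the cited ids that were never part of the supplied context."""
    cited = set(CITATION.findall(answer))
    return cited - set(allowed_ids)


def run_condition(ask, cases):
    """Count fabricated citations across all cases for one window size.

    ask(question, context) -> answer string (hypothetical model wrapper).
    Each case is {"question": str, "context": {source_id: text}}.
    """
    total = 0
    for case in cases:
        answer = ask(case["question"], case["context"])
        total += len(fabricated_sources(answer, case["context"].keys()))
    return total


# Stand-in models for a dry run; replace with real calls.
def honest_stub(question, context):
    first_id = next(iter(context))
    return f"According to the text [{first_id}]."


def bluffing_stub(question, context):
    return "As the literature shows [a book you never pasted]."
```

Run `run_condition` twice with the same `ask`: once with each case's context trimmed to a small window, once with the full dump. The comparison between the two totals is the experiment; the code only keeps the score.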

The Honest Pattern (That Scales)

Give the model less—but relevant—and chain it to receipts. Keep a tiny user profile for persistent facts (name, preferences). Everything else comes from fresh retrieval. That’s how you get answers you can defend, not just admire.
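The "tiny user profile" can be as boring as a JSON file. The sketch below is one possible shape, assuming a local file is an acceptable store; the function names and the prompt layout are illustrative, not a standard.

```python
import json
from pathlib import Path


def load_profile(path):
    """Read the persisted profile, or start empty if none exists yet."""
    p = Path(path)
    return json.loads(p.read_text(encoding="utf-8")) if p.exists() else {}


def save_fact(path, key, value):
    """Persist one fact so it survives after the current call ends."""
    profile = load_profile(path)
    profile[key] = value
    Path(path).write_text(json.dumps(profile, indent=2), encoding="utf-8")
    return profile


def assemble_context(profile, retrieved_chunks, question):
    """Build the per-call context: stored facts plus freshly retrieved sources.

    retrieved_chunks is a list of (source_id, text) pairs from your retriever.
    """
    facts = "\n".join(f"- {k}: {v}" for k, v in sorted(profile.items()))
    sources = "\n".join(f"[{cid}] {text}" for cid, text in retrieved_chunks)
    return (
        f"Known user facts:\n{facts}\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )
```

The file is the memory; the context is rebuilt from scratch on every call. If the model "remembers" something that is in neither the profile nor the retrieved sources, you know where it came from.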