“You weren’t rejected by a landlord. You were ghosted by a gradient.”

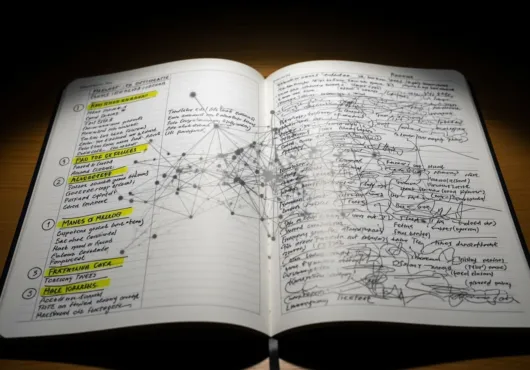

You tour the place, smile at the dog, pretend the water pressure matters. A week later: “We’ve decided to move forward with other applicants.” The leasing agent didn’t decide anything. A risk model did. It skimmed your data exhaust—credit shadows, old addresses, a background-check roulette—and declared you a flight risk with the moral fiber of a raccoon.

You weren’t denied by a person; a risk model flagged your “predictability” as low and everyone hid behind it.

The stack rewards sameness and punishes real-life variance with proxy-biased “safety” metrics.

Win by pre-bundling proof, demanding specific adverse factors, and forcing a manual review where a human is accountable.

The landlord isn’t reading your application—an engine is

Most big complexes outsource the yes/no to vendor stacks: tenant-screening APIs, “eviction propensity” models, background checks that “summarize” you like a bad biography. There’s a dashboard somewhere with red/green dots and a slider called “tolerance.” Your life became a knob.

Risk scoring as vibes (and it loves the wrong patterns)

What actually bumps your score? Patterns that look safe to a spreadsheet: long, boring sameness. Same job, same bank, same address, same last name on the mailbox. Models don’t reward resilience; they reward predictability. They learn that “stable” looks like old-money routines and penalize anything that smells like real life: contract work, roommate churn, a hospital bill that went feral for six months.

Background-check fan fiction

Background reports are stitched together from databases with uneven timestamps. One typo, one stale record, one data broker that decides anyone sharing your name and middle initial is you (plus a misdemeanor in a county you’ve never visited), and suddenly you’re flagged for “further review.” The “dispute” button exists, but the SLA is basically geologic time measured in Tuesdays.
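To see how a name-only match turns a stranger’s record into yours, here is a minimal sketch. The `Record` fields and both matching functions are hypothetical; real screening vendors’ matching rules are proprietary and may work differently. The point is only that matching on name and middle initial attaches any same-named record, while also requiring a known, identical date of birth rejects it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    # Hypothetical fields; real reports carry more (and messier) data.
    first: str
    middle_initial: str
    last: str
    dob: Optional[str]  # ISO date; many court records omit it
    county: str


def _name_key(r: Record) -> tuple:
    # Normalize case so "LOPEZ" and "Lopez" compare equal.
    return (r.first.lower(), r.middle_initial.lower(), r.last.lower())


def loose_match(applicant: Record, record: Record) -> bool:
    # Name plus middle initial only: the failure mode described above.
    return _name_key(applicant) == _name_key(record)


def strict_match(applicant: Record, record: Record) -> bool:
    # Also require a known, identical date of birth; a missing DOB is not a match.
    return (
        loose_match(applicant, record)
        and record.dob is not None
        and record.dob == applicant.dob
    )


if __name__ == "__main__":
    you = Record("Maria", "J", "Lopez", "1990-04-02", "King")
    stranger = Record("MARIA", "J", "LOPEZ", None, "Pima")
    print(loose_match(you, stranger))   # True: the misdemeanor is now "yours"
    print(strict_match(you, stranger))  # False: no DOB, no match
```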

Disparate impact in a hoodie

Nobody says “no [protected class]” out loud. They say “fraud likelihood,” “neighborhood fit,” “payment stability,” and let proxies do the dirty work. ZIP code becomes a stand-in for race. Prestige employer becomes a stand-in for class. Commute distance becomes a stand-in for family structure. The model smiles: neutral inputs, biased outputs.
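“Neutral inputs, biased outputs” can be measured from outcomes alone, without ever seeing the model’s inputs. A minimal sketch, assuming an auditor has a log of decisions joined to group labels after the fact (the labels and function names here are hypothetical): compute each group’s approval rate and compare it to the highest rate. The 0.8 cutoff is the “four-fifths” heuristic from U.S. employment-selection guidelines, used here only as an illustrative threshold, not as the legal test for housing.

```python
from collections import defaultdict
from typing import Hashable, Iterable


def approval_rates(decisions: Iterable[tuple[Hashable, bool]]) -> dict:
    # decisions: (group_label, approved) pairs from a hypothetical audit log.
    approved: dict = defaultdict(int)
    total: dict = defaultdict(int)
    for group, ok in decisions:
        total[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / total[g] for g in total}


def adverse_impact(decisions, threshold: float = 0.8) -> dict:
    # Return {group: ratio} for groups whose approval rate falls below
    # `threshold` times the best-treated group's rate.
    rates = approval_rates(decisions)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody approved: no reference rate to compare against
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    log = (
        [("A", True)] * 80 + [("A", False)] * 20
        + [("B", True)] * 50 + [("B", False)] * 50
    )
    print(adverse_impact(log))  # {'B': 0.625}
```

The audit never needs ZIP code or any other model input; it only needs outcomes per group, which is why proxy bias is detectable even when nobody typed a protected class into the model.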

Appeals are a maze by design

Try to appeal and you’ll meet The Policy: We can’t share proprietary criteria. Translation: you may be innocent, but the vibes are guilty. They’ll offer “manual review,” which is just a human staring at the same red dot the machine made.

Red flags in the wild (spot these tells)

“We use an independent screening service.” Independent like a mall cop with a tank. Expect rigid thresholds masquerading as “standards.”

“Pre-qualification in minutes.” Minutes to compute ≠ minutes to correct. Fast is friendly only for approvals.

“No cosigners accepted.” It means the system likes its binary: pass/fail, no nuance.

“Dynamic pricing + instant decisioning.” If software ratchets the rent, it’s probably running the gatekeeping too.

Countermoves: how to fight a phantom

Pre-bundle your boring: pay stubs, bank letters, an offer letter, landlord verifications. When the proof is already in hand, the “manual override” stops being extra work for whoever has to click it.

Run your own shadow report: Pull your credit reports and copies of your tenant-screening reports from the major vendors where possible; dispute anything spooky before you apply.

Break the automation script: Ask (in writing) for the specific adverse factors that triggered denial and request a manual review with documentation attached. Repeat their phrasing back to them.

Flip the optics: A short, calm cover letter that translates volatility into reliability (e.g., contract worker with annualized income + retainer proof) often gives a human permission to override the red dot.

Shop for policy, not granite: Smaller owners with imperfect websites often rely on human judgment. Less “Apply Now” glitter, more actual conversation.
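The “annualized income” move from the cover-letter tip is plain arithmetic. A minimal sketch, assuming you have twelve monthly deposit totals and that the listing uses an income-to-rent multiple; 3× is a common rule of thumb, but the function names and the default multiple here are assumptions, not any landlord’s actual policy.

```python
def annualize(monthly_income: list[float]) -> dict[str, float]:
    # Summarize 12 months of irregular income for a cover letter.
    # Assumes exactly 12 monthly totals (hypothetical input format).
    if len(monthly_income) != 12:
        raise ValueError("expected 12 monthly totals")
    annual = sum(monthly_income)
    return {
        "annual": annual,
        "monthly_average": annual / 12,
        "worst_month": min(monthly_income),
    }


def meets_income_multiple(summary: dict[str, float], rent: float,
                          multiple: float = 3.0) -> bool:
    # Hypothetical screening rule: average monthly income >= multiple x rent.
    return summary["monthly_average"] >= multiple * rent


if __name__ == "__main__":
    # A contract worker with two zero-income months.
    months = [2000, 8500, 0, 6000, 4500, 7000, 0, 9000, 5000, 3000, 6500, 4500]
    s = annualize(months)
    print(s["annual"])                          # 56000
    print(round(s["monthly_average"], 2))       # 4666.67
    print(meets_income_multiple(s, rent=1500))  # True
```

Leading with the annual figure and the average, rather than letting a reviewer fixate on the worst month, is the whole translation: same money, framed the way a threshold reads it.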

The quiet tax on “mobility”

The pitch for algorithmic leasing is efficiency. The effect is rationing. If your life isn’t a straight line, the system makes your rent more expensive in time and in options. It’s a vibe prison dressed as convenience: “Apply anywhere with one click”—as long as you look like last year’s winner.

Landlords are outsourcing courage

This isn’t only a tenant story; it’s a property story. The machine becomes the landlord’s alibi. “It’s not me, it’s the standard.” Standards are great when you can interrogate them. When you can’t, they’re just decor for fear of variance. We’ve built a society that punishes surprise and then wonder why the only people who get in are the least interesting.

What a sane system would do (and refuses to)

A sane system would weight trajectory over snapshot. It would accept evidence that contradicts data brokers. It would audit for proxy bias monthly, not when the attorney general sends a love letter. It would treat false negatives (good tenants wrongly turned away) as costly humans, not acceptable waste.

Until then, treat leasing like a boss battle with invisible hitboxes. Bring receipts. Force daylight. Translate your life into the model’s love language (predictable cash), and then demand that a human adult press the literal override.